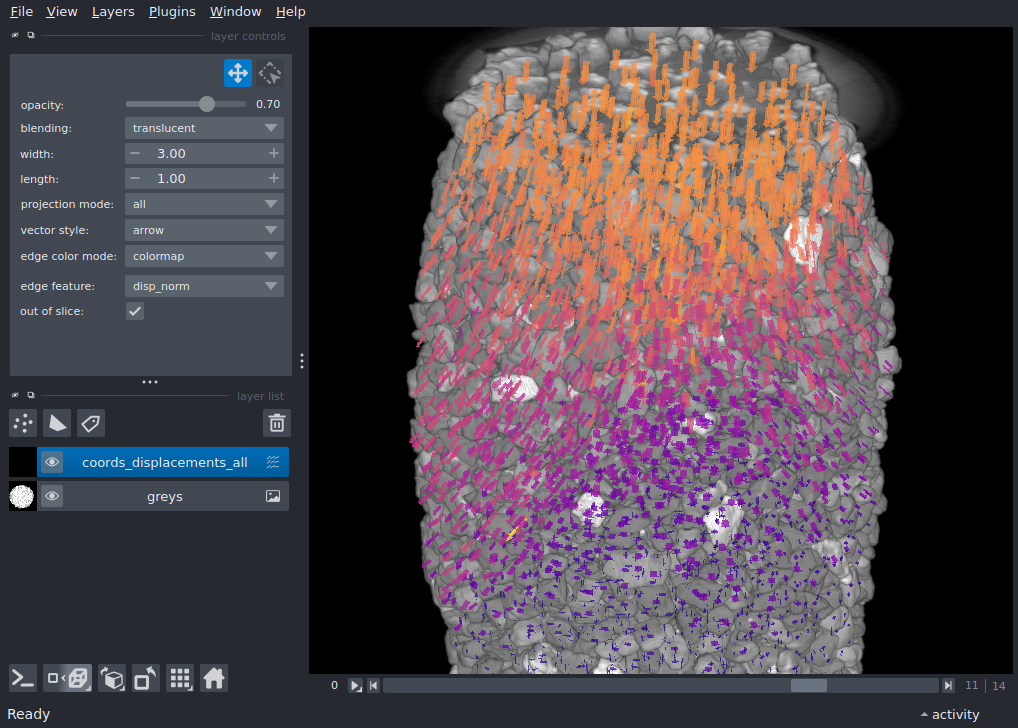

3D vector field and image across time

This example comes from a mechanical test on a granular material, where multiple 3D volumes were acquired using x-ray tomography during axial loading. We used [spam](https://www.spam-project.dev/) to label individual particles (with watershed and some manual tidying up), and these particles are tracked through time with 3D “discrete” volume correlation incrementally: t0 → t1 and then t1 → t2. Although we also measure rotations (and strains), here we are interested in visualising the displacement field on top of the image to spot tracking errors.

import numpy as np

import pooch

import tifffile

import napari

Input data

Input data are therefore:

- A series of 3D greyscale images
- A series of measured transformations between subsequent pairs of images

We also have consistent labels through time, which could also be visualised but are not shown here.

Let’s download it!

grey_files = sorted(pooch.retrieve(

    "doi:10.5281/zenodo.17668709/grey.zip",

    known_hash="md5:760be2bad68366872111410776563760",

    processor=pooch.Unzip(),

    progressbar=True

))

# Load individual 3D images into a 4D array with a list comprehension, skipping the last one

# result is a T, Z, Y, X 16-bit array

greys = np.array([tifffile.imread(grey_file) for grey_file in grey_files[0:-1]])

# Load incremental TSV tracking files from spam-ddic; [::2] keeps every other file to skip the VTK files also in the folder

tracking_files = sorted(pooch.retrieve(

    "doi:10.5281/zenodo.17668709/ddic.zip",

    known_hash="md5:2d7c6a052f53b4a827ff4e4585644fac",

    processor=pooch.Unzip(),

    progressbar=True

))[::2]
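
To make the shape of `greys` concrete, here is a minimal sketch with small synthetic volumes standing in for the TIFF files. The sizes, the number of scans, and the names `volumes` and `stack` are made up for illustration. Dropping the last volume leaves one image per increment if N scans yield N-1 tracking increments; that reading is an assumption and is not stated here.

```python
import numpy as np

# Synthetic stand-ins for a time series of 3D greyscale volumes (Z, Y, X).
# Sizes and number of scans are invented for illustration only.
rng = np.random.default_rng(0)
volumes = [
    rng.integers(0, 60000, size=(4, 5, 6), dtype=np.uint16)
    for _ in range(3)
]

# Stack all but the last volume along a new leading time axis: (T, Z, Y, X)
stack = np.array(volumes[0:-1])

print(stack.shape, stack.dtype)  # (2, 4, 5, 6) uint16
```

napari treats the leading axis of such an array as a slider dimension, so each timestep is shown as its own 3D volume.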

Collect data together for napari

We will loop through the tracking file for each timestep in order to prepare the data structure that napari needs.
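
Each pass of the loop reads one tracking file and keeps only the particles whose correlation converged (return status 2). A minimal sketch of that filtering step on a small in-memory table follows. The column layout here is hypothetical and much shorter than a real spam-ddic TSV, so the column indices differ from the ones used in this example.

```python
import io

import numpy as np

# Hypothetical, simplified tracking table (tab-separated).
# Real spam-ddic files have many more columns in different positions.
tsv = io.StringIO(
    "Label\tZpos\tYpos\tXpos\tZdisp\tYdisp\tXdisp\treturnStatus\n"
    "1\t10.0\t20.0\t30.0\t0.5\t0.0\t-0.5\t2\n"
    "2\t11.0\t21.0\t31.0\t9.0\t9.0\t9.0\t-1\n"
    "3\t12.0\t22.0\t32.0\t0.0\t1.0\t0.0\t2\n"
)

# Read the whole table once, skipping the header row
table = np.genfromtxt(tsv, delimiter="\t", skip_header=1)

# Boolean mask selecting converged particles only (status == 2)
converged = table[:, 7] == 2

# Apply the same mask to positions and displacements so rows stay aligned
coords = table[converged, 1:4]
disps = table[converged, 4:7]

print(coords.shape, disps.shape)  # (2, 3) (2, 3)
```

Reading the file once and slicing columns afterwards gives the same rows as calling `np.genfromtxt` separately for each column group, with less file I/O.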

These variables will hold the coordinates, displacements, and lengths (used for colouring the vectors) for all timesteps.
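
The napari vectors layer accepts an array of shape (N, 2, D), where each entry pairs a start point with a projection (the displacement) along the same D axes. As a minimal sketch of that layout, here are two made-up particles at a single timestep. The values and the names `starts`, `directions`, and `vectors` are illustrative only.

```python
import numpy as np

# Two invented particles at timestep t=0, given in (z, y, x) order
t = 0
coords = np.array([[10.0, 20.0, 30.0], [12.0, 22.0, 32.0]])
disps = np.array([[3.0, 0.0, 4.0], [0.0, 1.0, 0.0]])

# Euclidean length of each displacement, usable as a colouring property
lengths = np.linalg.norm(disps, axis=1)

# Prepend the time coordinate to each start point: (t, z, y, x)
starts = np.hstack([np.full((coords.shape[0], 1), t), coords])

# Prepend a zero time component: vectors do not point along the time axis
directions = np.hstack([np.zeros((disps.shape[0], 1)), disps])

# Pair start and direction per particle: shape (N, 2, 4)
vectors = np.stack([starts, directions], axis=1)

print(vectors.shape)  # (2, 2, 4)
print(lengths)        # [5. 1.]
```

Because the time component of every direction is zero, each vector stays within the timestep of its start point.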

coords_all = []

disps_all = []

lengths_all = []

for t, tracking_file in enumerate(tracking_files):

    # Load the indicator for convergence

    returnStatus = np.genfromtxt(tracking_file, skip_header=1, usecols=(19))

    # Load coords and displacements, keeping only converged results (returnStatus==2)

    coords = np.genfromtxt(tracking_file, skip_header=1, usecols=(1,2,3))[returnStatus==2]

    disps = np.genfromtxt(tracking_file, skip_header=1, usecols=(4,5,6))[returnStatus==2]

    # Compute lengths in order to colour vectors

    lengths = np.linalg.norm(disps, axis=1)

    # Prepend an extra dimension to coordinates to place them in time, and fill it with the incremental t

    coords = np.hstack([np.ones((coords.shape[0],1))*t, coords])

    # Prepend zeros to the displacements (the "end" of the vector), since they do not displace through time

    disps = np.hstack([np.zeros((disps.shape[0],1)), disps])

    # Add to lists

    coords_all.append(coords)

    disps_all.append(disps)

    lengths_all.append(lengths)

# Concatenate into arrays

coords_all = np.concatenate(coords_all)

disps_all = np.concatenate(disps_all)

lengths_all = np.concatenate(lengths_all)

# Stack this into an array of size N (individual points) x 2 (vector start and displacement) x 4 (tzyx)

coords_displacements_all = np.stack([coords_all, disps_all], axis=1)

viewer = napari.Viewer(ndisplay=3)

viewer.add_image(

    greys,

    contrast_limits=[15000, 50000],

    rendering="attenuated_mip",

    attenuation=0.333

)

viewer.add_vectors(

    coords_displacements_all,

    vector_style='arrow',

    length=1,

    properties={'disp_norm': lengths_all},

    edge_colormap='plasma',

    edge_width=3,

    out_of_slice_display=True,

)

viewer.camera.angles = (2,-11,-23.5)

viewer.camera.orientation = ('away','up','right')

viewer.dims.current_step = (11,199, 124, 124)

if __name__ == '__main__':

    napari.run()

Total running time of the script: (0 minutes 25.797 seconds)